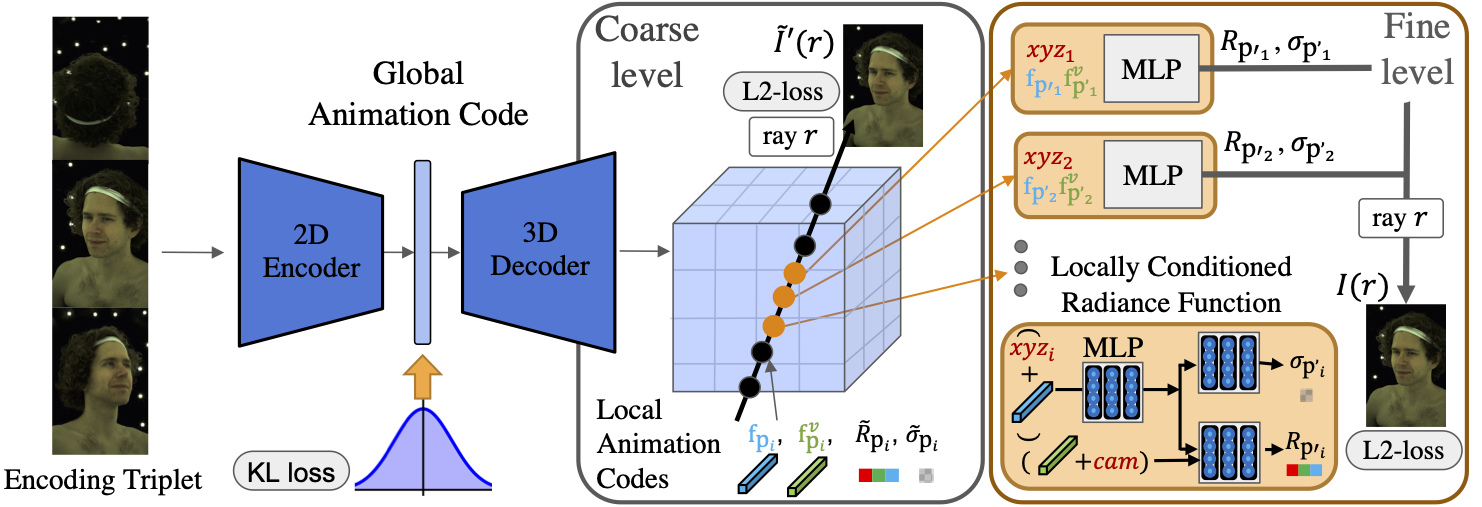

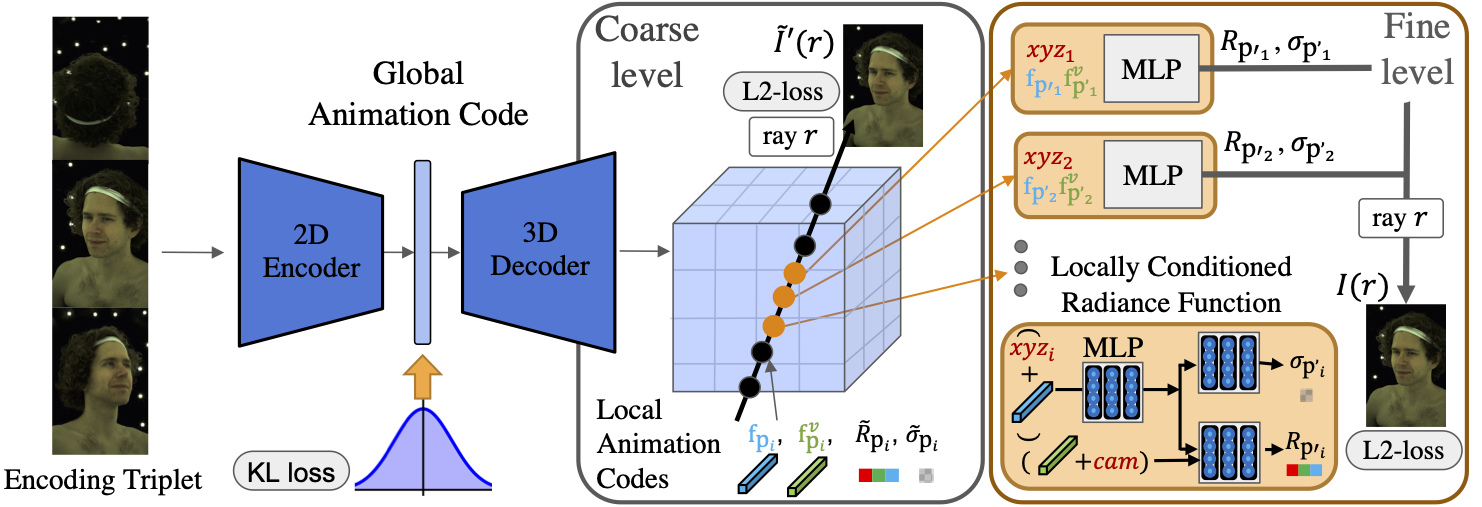

Photorealistic rendering of dynamic humans is an important ability for telepresence systems, virtual shopping, synthetic data generation, and more. Recently, neural rendering methods, which combine techniques from computer graphics and machine learning, have created high-fidelity models of humans and objects. Some of these methods do not produce results with high enough fidelity for driveable human models (Neural Volumes), whereas others have extremely long rendering times (NeRF). We propose a novel compositional 3D representation that combines the best of previous methods to produce both higher-resolution and faster results. Our representation bridges the gap between discrete and continuous volumetric representations by combining a coarse 3D-structure-aware grid of animation codes with a continuous learned scene function that maps every position and its corresponding local animation code to its view-dependent emitted radiance and local volume density. Differentiable volume rendering is employed to compute photorealistic novel views of the human head and upper body as well as to train our novel representation end-to-end using only 2D supervision. In addition, we show that the learned dynamic radiance field can be used to synthesize novel unseen expressions based on a global animation code. Our approach achieves state-of-the-art results for synthesizing novel views of dynamic human heads and the upper body. |
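
The abstract does not spell out the rendering equations, so the following is only a minimal sketch of the standard emission-absorption quadrature commonly used for differentiable volume rendering in NeRF-style methods; it may differ from the authors' implementation. The function name `composite_ray` and its parameters are hypothetical. In the described representation, the per-sample densities and colors would come from the learned scene function evaluated at each sample position with its local animation code; here they are passed in as plain numbers.

```python
import math
from typing import List, Sequence, Tuple


def composite_ray(
    densities: Sequence[float],
    colors: Sequence[Sequence[float]],
    deltas: Sequence[float],
) -> Tuple[List[float], float]:
    """Composite samples along one ray, ordered front to back.

    densities: volume density at each sample (assumed non-negative)
    colors:    emitted RGB radiance at each sample
    deltas:    distance covered by each sample along the ray
    Returns the accumulated RGB color and the accumulated opacity.
    """
    transmittance = 1.0  # fraction of light that still reaches the camera
    rgb = [0.0, 0.0, 0.0]
    for sigma, color, delta in zip(densities, colors, deltas):
        # Opacity contributed by this ray segment.
        alpha = 1.0 - math.exp(-sigma * delta)
        weight = transmittance * alpha
        rgb = [acc + weight * channel for acc, channel in zip(rgb, color)]
        # Light remaining after passing through this segment.
        transmittance *= 1.0 - alpha
    return rgb, 1.0 - transmittance
```

Because every step is a smooth function of the densities and colors, the same computation written in an autodiff framework can be trained from 2D image losses alone, which is the property the abstract relies on.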

|

Z. Wang, et al. Learning Compositional Radiance Fields of Dynamic Human Heads |

|

Free view rendering of three human heads. We show the talking head under different camera poses and expressions. |

|

We show results for Neural Volumes (NV) in the first column and results for temporal NeRF in the second. The third column is our result, and the fourth is the ground truth image for a fixed viewpoint. Note that we show the ground truth image from a fixed viewpoint as we do not have ground truth for arbitrary camera poses. |

|

We show free view rendering results of several expressions sampled from our latent space. |

|

We show animation results by performing linear interpolation of several sampled expressions. For each video, we first sample 12 latent codes to generate the keyframes. Then, we generate 10 more frames between every pair of keyframes by linear interpolation in the latent space.
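
The keyframe interpolation described above can be sketched as follows. This is an illustrative sketch, not the authors' code: the function name `interpolate_keyframes`, the code dimension, and the Gaussian sampling of placeholder codes are all assumptions, and "every pair of keyframes" is read here as every pair of consecutive keyframes in a non-looping sequence.

```python
import random
from typing import List, Sequence


def interpolate_keyframes(
    keyframes: Sequence[Sequence[float]], n_between: int = 10
) -> List[List[float]]:
    """Insert n_between linearly interpolated codes between consecutive keyframes."""
    if len(keyframes) < 2:
        return [list(code) for code in keyframes]
    frames: List[List[float]] = []
    for start, end in zip(keyframes, keyframes[1:]):
        frames.append(list(start))
        for i in range(1, n_between + 1):
            # t runs strictly between 0 and 1, so keyframes are not duplicated.
            t = i / (n_between + 1)
            frames.append([(1.0 - t) * a + t * b for a, b in zip(start, end)])
    frames.append(list(keyframes[-1]))
    return frames


# Placeholder latent codes; the real codes would be sampled from the model's
# latent space. CODE_DIM and the standard normal draw are assumptions.
CODE_DIM = 4
rng = random.Random(0)
keyframes = [[rng.gauss(0.0, 1.0) for _ in range(CODE_DIM)] for _ in range(12)]
frames = interpolate_keyframes(keyframes, n_between=10)
print(len(frames))  # 12 keyframes + 11 gaps * 10 frames = 122
```

Each resulting code would then be decoded and rendered as one video frame, so under this reading a video built from 12 keyframes has 122 frames.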

|

|

Here we show two videos of driving the animation using a set of 2D keypoints. The first one is generated from the model on a novel sequence and the second one is from the model with encoder-only finetuning on the same sequence. The leftmost column (with a blue border) serves as the input. The second and third columns show the prediction and the ground truth, respectively. |

|

We first test our model on a novel sequence without any finetuning. The leftmost column (with a red border) shows the inputs to the model. The second and third columns show the prediction and the ground truth, respectively. |

|

Here we show results of our model on the same sequence with encoder-only finetuning. The leftmost column (with a red border) shows the inputs to the model. The second and third columns show the prediction and the ground truth, respectively.

|